Can Humans Stay Relevant?

Disclaimer

Before we dive deep into my thought process, I would like to mention one very important thing. I am in no way an expert, and I don't claim to be one. I am just a curious person trying to understand the current progress in AI and how it will affect our future. I want to ask whether AI can really become as smart as a human, and whether it will make us obsolete.

Why is it even a question?

We are living in an exciting time. Progress in AI is accelerating, and its implications warrant this question now more than ever. We do not completely understand the technology. What we do understand is that when a model built on the transformer architecture is trained on a large enough dataset, it starts to show signs of intelligence. But to what level, and how this will impact us, are still open questions.

The research paper GLU Variants Improve Transformer ends with the following conclusion:

We have extended the GLU family of layers and proposed their use in Transformer. In a transfer-learning setup, the new variants seem to produce better perplexities for the de-noising objective used in pre-training, as well as better results on many downstream language-understanding tasks. These architectures are simple to implement, and have no apparent computational drawbacks. We offer no explanation as to why these architectures seem to work; we attribute their success, as all else, to divine benevolence.

Given all the surrounding evidence, I don't think this question is without merit.

What is human intelligence?

According to Encyclopedia Britannica:

human intelligence, mental quality that consists of the abilities to learn from experience, adapt to new situations, understand and handle abstract concepts, and use knowledge to manipulate one’s environment.

In my opinion, it is reasonable to include creativity in this definition as well. After all, it is part of our identity.

Mapping Human Intelligence to AI Models: Large Language Models (LLMs)

If we limit the definition of human intelligence to learning from experience, adapting to new situations, understanding and handling abstract concepts, and using knowledge to manipulate one’s environment, then it's fair to say that current LLMs are either showing sparks of this level of intelligence or are already there.

Learn from experience

In the following video, you can clearly see that GPT-4 is very much capable of self-reflection and learning from experience.

Understand and handle abstract concepts

In the research paper Sparks Of AGI: Early Experiments With GPT-4, the authors report that GPT-4 is quite capable of handling abstract ideas.

We have also shown that GPT-4 is able to handle abstract and novel situations that are not likely to have been seen during training, such as the modernized Sally-Anne test and the ZURFIN scenario. Our findings suggest that GPT-4 has a very advanced level of theory of mind.

You can also watch a high-level overview of the research paper in the video below.

Adapt to new situations

While GPT-4 does not particularly excel in this department, it can do well enough with the help of a surrogate (e.g., a human).

The following two quotes are taken from the same paper, Sparks Of AGI: Early Experiments With GPT-4.

While it is clearly not embodied, the examples above illustrate that language is a powerful interface, allowing GPT-4 to perform tasks that require understanding the environment, the task, the actions, and the feedback, and adapting accordingly. While it cannot actually see or perform actions, it can do so via a surrogate (e.g., a human). Having said this, we acknowledge the limitation that we only tested GPT-4 on a limited number of games and real-world problems, and thus cannot draw general conclusions about its performance on different types of environments or tasks. A more systematic evaluation would require a larger and more diverse set of real world problems where GPT-4 was actually used in real-time, rather than retrospectively.

And

Continual learning: The model lacks the ability to update itself or adapt to a changing environment. The model is fixed once it is trained, and there is no mechanism for incorporating new information or feedback from the user or the world. One can fine-tune the model on new data, but this can cause degradation of performance or overfitting. Given the potential lag between cycles of training, the system will often be out of date when it comes to events, information, and knowledge that came into being after the latest cycle of training.

The main bottleneck discussed here is the model's inability to keep itself up to date. The current training data cut-off for GPT-4 is September 2021. Anything that happened after that is unknown to GPT-4 unless it is retrained.

To address this problem, OpenAI recently announced plugins that can give the main LLM access to up-to-date information.

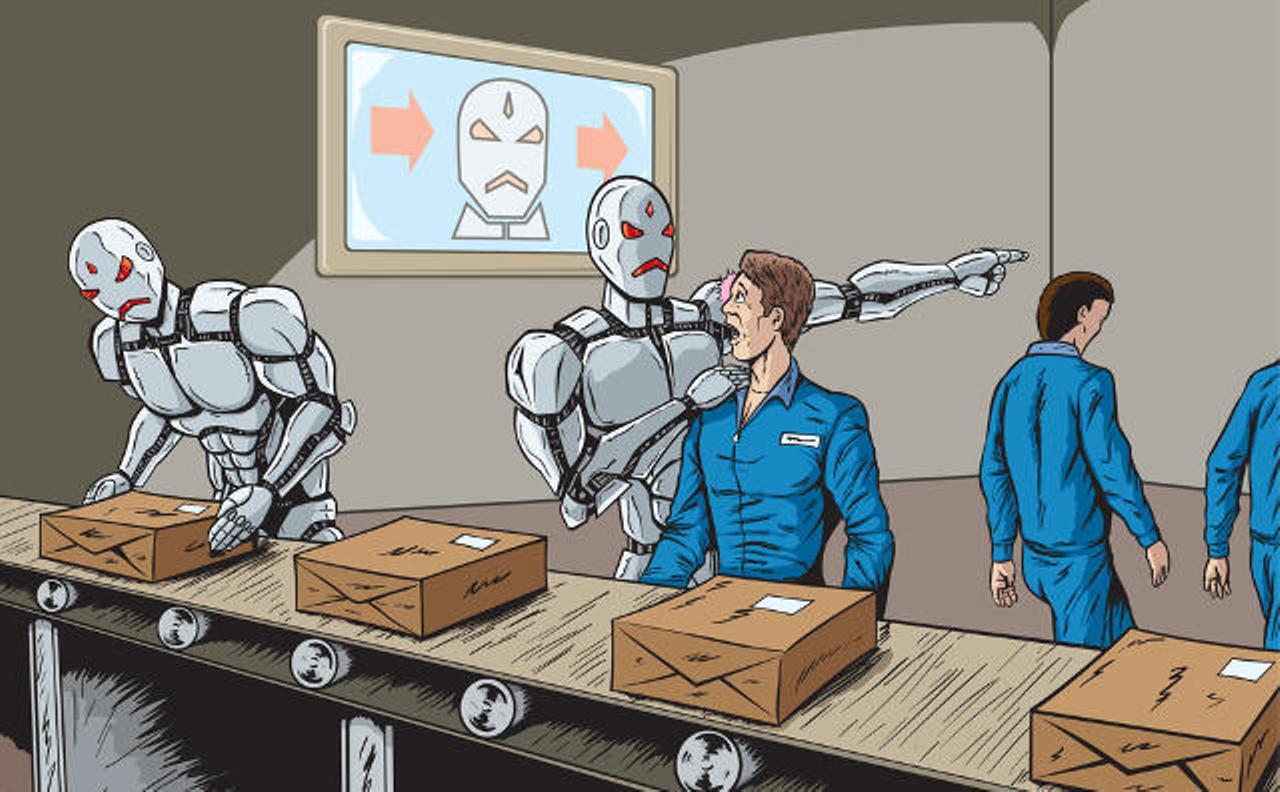

How can we differentiate ourselves from LLMs?

Given the information above, one must ask: how can we differentiate ourselves from LLMs? What is the one thing that we can do and LLMs can't? Is it even possible to keep the unique value that humans offer, or will we become obsolete?

I am not the only person asking this question. Thousands of people are asking it. Even the experts who actively work in this field are aware of the problem, and I have to say, it does raise an existential crisis for us.

Even I, as a developer whose entire job is built on knowledge work, ask myself this question every day. That's why I would like to give my two cents here.

Creativity

I believe the only way for us to distinguish ourselves moving forward is to nurture creativity across society. Many educational institutions focus more on children's ability to memorize things than on their ability to think creatively. Even if we look around us, we have fallen into the trap of doing less real knowledge work and mindlessly following trends instead. This is a huge problem.

One can raise an objection here. GPT-4 is already showing signs of understanding theory of mind. Tools like Midjourney suggest that these AI systems can be creative too, and work made with them has even won competitions.

So how can I say with certainty that creativity is the only thing that can differentiate us from LLMs?

Well, to be honest, I can't. I am still trying to make sense of all of it. What I can deduce from our history of evolution is that we have a natural tendency to adapt to new situations in order to survive, and I think adapting is our best course of action. Given that any non-creative job will be very easily automated by these models, the only logical conclusion I see is that we need to focus on creativity.

I firmly believe that a future where we as humans don't feel obsolete is only possible if we focus on nurturing creativity. We should make it a priority for children and focus less on repetitive tasks. Computers are excellent at automating repetitive tasks, and they are only going to get better.

Some professors at Stanford have argued the same thing. If we want to survive this AI revolution, we need to augment ourselves with its capabilities and use it to our advantage. We need to be the creative brain that this incredibly powerful beast requires.

If we continue down this path of following trends, giving in to herd mentality and using less and less creativity, then we are going to be replaced. No doubt about it. Evolution agrees with me on this as well. We evolved into this technical age over time. We built our intuitions, instincts and creativity over time. A caveman could never survive in this day and age. We need to stop being the cavemen of the 21st century and start embracing, adapting to and augmenting ourselves with this technology.